Thursday, December 16, 2010

Taking the hassle out of scheduling Automated Test Runs

Friday, December 10, 2010

Uncluttering your tasks using Task Boards

Many Agile and iterative Waterfall teams have simplified this process by using Task Boards. If you've never used a Task Board, simplicity is what makes it interesting. A Task Board is simply a list of all the tasks a developer has to work on. It is normally divided into 3 columns:

1. To Do - List of things I've not started

2. In Progress - Things I am currently working on

3. Completed - Stuff I've completed

To do this, most teams simply use a whiteboard with sticky notes to identify these tasks. Here is an example:

So as we begin working on things, we simply move the sticky notes to In Progress. Once we complete them, we move them to the Completed area. As new tasks come in, they go into the To Do column. By having this front and center for the team to see, we all know the status of our tasks.

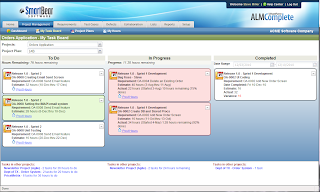

After working with this a while, we thought it would be cool to have our Application Lifecycle Management (ALM) (http://www.softwareplanner.com) tool display Task Boards. So I asked my team to come up with a simple yet effective way of showing this online. They came up with a cool design. As a developer, you can access your Task Board and see all your tasks, just as you can by using a whiteboard. However, they took it a step further. For each task, they show:

1. What requirement it is related to

2. How many hours have been worked thus far

3. How many hours are left (and pct complete)

4. The critical dates (estimated start and finish dates)

They even extended it by showing any tasks that are due today in green and tasks that are overdue in red. That allows you to quickly spot the ones that might be slipping. This new feature is slated to go to production in ALMComplete (http://www.softwareplanner.com) sometime this month (December 2010). Here is a quick preview of what it looks like and here is a quick movie that shows how it works:

http://www.softwareplanner.com/movies.asp?Topic=TaskBoards
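The highlighting rules described above can be sketched in a few lines of Python. This is only an illustration, not how ALMComplete implements it: the `Task` class, its field names, and the percent-complete formula (hours worked divided by hours worked plus hours left) are assumptions made for the example, as is the choice to leave completed tasks unhighlighted.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Task:
    # Hypothetical task record; field names are illustrative only.
    name: str
    hours_worked: float
    hours_left: float
    est_finish: date
    completed: bool = False

    def pct_complete(self) -> float:
        """Share of total effort already worked, as a percentage (assumed formula)."""
        total = self.hours_worked + self.hours_left
        return 0.0 if total == 0 else round(100 * self.hours_worked / total, 1)

    def highlight(self, today: date) -> Optional[str]:
        """Green for tasks due today, red for overdue tasks, None otherwise."""
        if self.completed:
            return None  # assumption: finished tasks are never flagged
        if self.est_finish == today:
            return "green"
        if self.est_finish < today:
            return "red"
        return None


task = Task("Fix login bug", hours_worked=6, hours_left=2, est_finish=date(2010, 12, 10))
print(task.pct_complete(), task.highlight(date(2010, 12, 11)))
```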

What do you think?

Tuesday, October 12, 2010

Using Retrospectives to Improve Future Testing Efforts

Click here (http://www.softwareplanner.com/guidedtours/edgeui/Camtasia.asp?Filename=UnitingPart05) to begin the presentation. You will learn:

1. What a retrospective is and how it can help your team improve

2. How to conduct a retrospective

3. How to improve future releases by applying what you learn in retrospectives

Friday, September 24, 2010

Software Planner New Release - version 9.4.2

We just issued a new release of Software Planner to production (Release 9.4.2) and it has several new features:

- JIRA Integration – Allows synching of JIRA issues with Software Planner defects. Issues can originate or be updated in either system and will be synced.

Movie: http://www.softwareplanner.com/guidedtours/edgeui/Camtasia.asp?filename=SPJIRAIntegration

User’s Guide: http://www.softwareplanner.com/UsersGuide_swpSyncJIRA.pdf

- HP Quick Test Pro (QTP) Integration – Allows automation engineers to run QTP scripts and have the run results appear in Software Planner lists and dashboards.

Movie: http://www.softwareplanner.com/guidedtours/edgeui/Camtasia.asp?filename=SPQTPIntegration_Overview

User’s Guide: http://www.softwareplanner.com/UsersGuide_QTP.pdf

- Subversion Integration – Allows automation teams to associate Subversion source code with Software Planner requirements, test cases and/or defects.

Movie: http://www.softwareplanner.com/GuidedTours/EdgeUI/Camtasia.asp?FileName=SVNIntegration

User’s Guide: http://www.softwareplanner.com/UsersGuide_SVN.pdf

- Microsoft Project Integration – Allows importing and exporting projects from Software Planner to/from Microsoft Project.

Movie: http://www.softwareplanner.com/guidedtours/edgeui/Camtasia.asp?filename=ImportMSProject

- Record Locking Feature – Automatically detects if others are editing a record to prevent data collisions.

Movie: http://www.softwareplanner.com/guidedtours/edgeui/Camtasia.asp?filename=RecordLocking

Wednesday, September 8, 2010

Optimizing your Test Efforts during the QA cycle

- How to determine how your QA cycle is progressing

- How to determine your defect resolution rate

- How to use metrics to determine the stability and production readiness of your software release.

You can see the newsletter at: http://www.softwareplanner.com/newsletters/newsletter_2010_09_sp.htm

Monday, August 9, 2010

Uniting your Automated and Manual Testing

http://www.softwareplanner.com/guidedtours/edgeui/camtasia.asp?filename=UnitingPart03

Monday, July 19, 2010

Pragmatic Software is Now SmartBear Software

You may have heard of the other sister company, the original Smart Bear Software, the peer code review company that created CodeCollaborator. The new SmartBear now includes the old Smart Bear and of course AutomatedQA, which is well known for its TestComplete automated testing and AQtime performance profiling tools. (You may also know that TestComplete works exceptionally well with SoftwarePlanner for test case management).

How will this benefit our users?

For one, our community of users has grown to more than 75,000 software developers and testers with the formation of the new company. We look forward to helping you take advantage of this much larger SmartBear community for expert advice, more support, knowledge sharing and of course improved software quality. Keep an eye out as we start to share tips and tricks, white papers, and other best practices that we hope will help you produce better software.

The combined company is also much better equipped to push product development forward. We are already working on a bunch of new ideas that will not only make it easier for our products to work together, but also put great new functionality in the hands of our users. Stay tuned – you will hear much more from us about automated testing, test management, code review, performance profiling, and development management.

Why did we change our name?

As AutomatedQA, Smart Bear Software and Pragmatic Software worked together to become one company, we had to select a name that we felt reflected our brand values: to be community-focused and to offer innovative tools that are highly functional and at the same time actually affordable. Also, now that we have tools that support the immediate needs of both developers and testers, we needed a name that wasn’t tied to one particular type of product. So we and our sister companies chose the name of one of our very successful existing brands to represent us all going forward. We also think the name is a lot more fun and interesting than using two or three letter acronyms. We hope you like our decision!

Here’s the new logo:

What do you think? Just a few minor adjustments of the original Smart Bear logo, which we thought was pretty cool to begin with as well.

Visit our existing Community page to learn how you can follow us and communicate and share with one another as we continue to add to our products, enhance our web site, and grow our community involvement.

If you want more details regarding the announcement, click here, and stay tuned for some exciting product news in the days and weeks ahead.

Are you curious about all the other tools that are now part of the SmartBear family? Ever needed to increase your test coverage or find those annoying performance issues or memory leaks? Or even find issues before they become bugs through fast and pain-free code review?

Tell your friends, give them a try and check out any of our tools for free:

What’s your take on the New SmartBear? Let us know, leave us a comment, Tweet us or drop us a line.

Friday, July 2, 2010

Best Practices for Planning your Manual Test Effort

Click on the URL: http://www.softwareplanner.com/guidedtours/edgeui/Camtasia.asp?filename=UnitingPart02 to begin the presentation. You will learn:

1. How to improve the effectiveness of your requirements definition

2. Best practices for creating manual test cases for each requirement

3. How to ensure you have good test coverage for traceability for each requirement

4. How to best organize your manual tests

Friday, June 4, 2010

Best Practices for Planning your Automated Test Effort

Click on this link (http://www.softwareplanner.com/guidedtours/edgeui/Camtasia.asp?filename=UnitingPart01) to begin the presentation. You will learn:

1. How to identify what test cases should be automated

2. How to best organize your automated test cases

3. How to version and protect your automated test cases

4. How to schedule your automated test cases to run periodically

Friday, May 14, 2010

5 Benefits of Daily Builds

To see the full article, click here: http://www.softwareplanner.com/newsletters/Newsletter_2010_05_SP.htm

Tuesday, April 20, 2010

Uniting your Automated and Manual Test Efforts

To see the full article, click here:

http://www.softwareplanner.com/Newsletters/newsletter_2010_04_SP.htm

8 Best Practices for Managing Software Releases

Many software releases run longer than expected. While project slippage is sometimes unavoidable, there are some clear-cut best practices that you can employ to reduce the chance of slippage.

See the full article here: http://www.softwareplanner.com/Newsletters/newsletter_2010_03_SP.htm.

Thursday, February 11, 2010

Powerful Metrics for Developers

What are Powerful Metrics?

Metrics are simply a way to measure specific things you are doing, but you could create hundreds of irrelevant metrics that really don't move you any closer to solving your everyday problems. Powerful metrics are a small set of indicators that are targeted to help you accomplish a specific set of goals.

Metrics Driven by Specific Goals

Before defining your metrics, ask yourself "What goal(s) am I trying to accomplish?", then write your goals down. For example, typical goals for a developer might be to:

- Improve my estimating skills

- Reduce defect re-work

- Ensure that my code is tight and re-usable

“Improve my estimating skills”

To do this, you first need to record the actual time you work on assigned tasks and compare it to the original estimates. The difference between the estimate and the actual is the "variance". At the end of a project, total your variances to see how well you track against estimated hours. If you find that your variances are over 10%, consider buffering your estimates on the next project by the variance amount. Consider the example below:

In the example above, buffering your estimates allows you to become a better estimator. After tracking this for a few releases and buffering your estimates, you will begin providing more accurate estimates. If your team is using Software Planner, you can run variance reports that automatically calculate the information above. Below is an example report:
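The variance and buffering arithmetic can also be worked through by hand or in a short script. The sketch below is a minimal illustration, assuming variance is expressed as a percentage of the estimate; the task names and hours are hypothetical and are not taken from the example or report referenced above.

```python
def variance_pct(estimated: float, actual: float) -> float:
    """Variance as a percentage of the estimate (positive means over the estimate)."""
    return (actual - estimated) / estimated * 100


def buffered_estimate(raw_estimate: float, past_variance_pct: float,
                      threshold_pct: float = 10.0) -> float:
    """Pad a new estimate by the past variance when that variance exceeds the threshold."""
    if past_variance_pct > threshold_pct:
        return raw_estimate * (1 + past_variance_pct / 100)
    return raw_estimate


# Hypothetical tasks from a finished project: (name, estimated hours, actual hours)
tasks = [("Design login form", 8, 10), ("Build report", 12, 15)]
total_estimated = sum(est for _, est, _ in tasks)
total_actual = sum(act for _, _, act in tasks)

overall = variance_pct(total_estimated, total_actual)
print(overall)                         # overall variance for the project, in percent
print(buffered_estimate(40, overall))  # a raw 40-hour estimate, padded for the next project
```

Here the project ran 25% over its estimates, which is above the 10% threshold, so a raw 40-hour estimate on the next project would be padded to 50 hours.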

“Reduce defect re-work”

Software releases often take much longer than needed because defects are not resolved on the first round. Each time a developer has to fix the same issue again, testers have to regress the change again, and that adds time to the release timeline. Many times, defects are re-worked 5 or more times before they are correctly fixed.

To resolve this issue, you must first have an appreciation for how often this is happening. One strategy for this is to add a field to your defect tracking solution that indicates that a defect is being re-worked. If your defect tracking solution has auditing capabilities, it should be easy to produce a report or dashboard that counts the number of times defects are re-worked. Below is a dashboard generated from Software Planner that shows defect re-work:
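If your defect tracker can export its audit history, the re-work count is simple to compute yourself. The sketch below assumes a hypothetical export format, a list of `(defect_id, new_status)` pairs, and treats every transition to a status named `"Reopened"` as one round of re-work. Both the format and the status name are assumptions; adjust them to whatever your tool actually records.

```python
from collections import Counter
from typing import Iterable, Tuple


def rework_counts(audit_log: Iterable[Tuple[int, str]],
                  rework_status: str = "Reopened") -> Counter:
    """Count how many times each defect entered the re-work status."""
    return Counter(defect_id for defect_id, status in audit_log
                   if status == rework_status)


# Hypothetical audit history: (defect id, status the defect was changed to)
audit_log = [
    (101, "Fixed"), (101, "Reopened"), (101, "Fixed"), (101, "Reopened"), (101, "Fixed"),
    (102, "Fixed"),
    (103, "Fixed"), (103, "Reopened"), (103, "Fixed"),
]

counts = rework_counts(audit_log)
for defect_id, times in counts.most_common():
    print(defect_id, times)
```

Defects that were never re-worked simply do not appear in the result, so the output lists only the problem defects, worst first.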

By knowing this, you can work on reducing re-work by employing these techniques:

- Better steps-to-reproduce - Many times re-work happens because the tester has not provided enough steps to reproduce the issue consistently. Work with your QA team on providing really great reproducible steps. Even better, have them record the steps into a movie that shows how to reproduce the issue. This can quickly be done by using Jing (http://www.jingproject.com/), a free utility that records the issue and creates a link so that the developer can see it in action.

- Better Unit Testing - Sometimes developers rush through a fix and do not fully test it before sending it back to QA. Testing it fully first takes discipline, but it will save you time in the long run.

- Peer Code Review - Another set of eyes on your code can help you reduce re-work. Consider asking a peer to review your code before compiling it and sending it to your QA team. You can speed up code reviews by using tools like Code Collaborator.

“Ensure that my code is tight and re-usable”

To do this, you must do peer code reviews. By having others inspect your code, you will begin to write tighter and more reusable code. To measure the effectiveness of this, compare the number of defects found during code review with the number found during quality assurance. This will quickly show how code review reduces the defects found during QA, which are more costly to fix than those found during development. Keep track of code reviews and defect statistics. Below is an example:

You can speed up code reviews by using tools like Code Collaborator.
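One simple way to track the code review metric is the share of all known defects that were caught in review rather than in QA. The sketch below is only an illustration of that calculation; the release names and defect counts are hypothetical.

```python
def review_catch_rate(found_in_review: int, found_in_qa: int) -> float:
    """Percentage of all known defects that were caught during code review."""
    total = found_in_review + found_in_qa
    return 0.0 if total == 0 else found_in_review / total * 100


# Hypothetical per-release statistics: (release, defects found in review, defects found in QA)
releases = [("Release A", 10, 30), ("Release B", 30, 10)]

for name, in_review, in_qa in releases:
    print(name, review_catch_rate(in_review, in_qa))
```

A rate that rises from release to release suggests that more defects are being caught before the code reaches QA.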

Summary

Dedicate yourself to improving your job by identifying your goals and tracking metrics that help you determine how you are trending towards your goals. The metrics listed above work fine for my team but I would like to hear what metrics you find are helpful in your organization. To communicate that to me, please fill out this survey.

If we get great feedback from this, we will publish the results in our next month's article so that we can all learn from each other.

Sign Up Today

Start improving your project efficiency and success by signing up for our monthly newsletters today.

Want a FREE BOOK of code review tips from Smart Bear Software?

You may not realize it, but Software Planner has a sister - it's Smart Bear Software. Both companies are owned by AutomatedQA and our teams relish helping developers deliver software reliably and with high quality. Smart Bear’s Code Collaborator tool helps development teams review code from anywhere, without the usual grunt-work and pain that often accompany peer code review.

Bugs cost 8-12 times less to fix if found in development rather than QA (and 30-100 times less if found in development rather than after release). And of course bugs found in development mean less time spent chasing them down for QA folks! Smart Bear believes so strongly in code review that they will send you a free book of code review tips. They conducted the world’s largest case study of peer code review with Cisco Systems, spanning 2500 code reviews, and the book presents these findings along with other peer review best practices.

If you know anyone who might be interested, please pass this information on to them. They can visit http://www.codereviewbook.com/?SWPnews to request a copy of their own!

Helpful Software Testing Tools and Templates

Below are some helpful software testing resources and templates to aid you in developing software solutions:

Thursday, January 7, 2010

Powerful Metrics for Testers

What are Powerful Metrics?

Metrics are simply a way to measure specific things you are doing, but you could create hundreds of irrelevant metrics that really don't move you any closer to solving your everyday problems. Powerful metrics are a small set of indicators that are targeted to help you accomplish a specific set of goals.

Metrics Driven by Specific Goals

Before defining your metrics, ask yourself "What goal(s) am I trying to accomplish?", then write your goals down. For example, typical goals for a tester might be to:

- Ensure that each new feature in the release is fully tested

- Ensure that our release date is realistic

- Ensure that existing features don't break with the new release

Now that we have our goals defined, let's figure out how we can create a set of metrics to help us accomplish them.

"Ensure that each new feature in the release is fully tested"

To do this, we must have assurance that we have enough positive and negative test cases to fully test each new feature. This can be done by creating a traceability matrix that counts the number of test cases, number of positive test cases and number of negative test cases for each requirement:

| Requirement | # Test Cases | # Positive | # Negative | Comments |
| --- | --- | --- | --- | --- |
| Visual Studio Integration | 104 | 64 | 40 | Good Coverage |
| Perforce Integration | 0 | 0 | 0 | No Coverage |
| Enhanced Contact Management Reporting | 45 | 45 | 0 | No Negative Test Case Coverage |
| Contact Management Pipeline Graph | 10 | 8 | 2 | Too few test cases |
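The comments column of a matrix like this can be generated from the counts. The sketch below uses the figures from the table above; the classification rules are an assumption, and in particular the `min_cases` threshold of 20 is a hypothetical cut-off chosen only so that the result matches the comments shown. Pick a threshold that suits the size of your own features.

```python
def coverage_comment(total: int, positive: int, negative: int, min_cases: int = 20) -> str:
    """Classify a requirement's test coverage from its test case counts."""
    if total == 0:
        return "No Coverage"
    if negative == 0:
        return "No Negative Test Case Coverage"
    if total < min_cases:  # hypothetical threshold, not from the article
        return "Too few test cases"
    return "Good Coverage"


# (requirement, # test cases, # positive, # negative), taken from the table above
matrix = [
    ("Visual Studio Integration", 104, 64, 40),
    ("Perforce Integration", 0, 0, 0),
    ("Enhanced Contact Management Reporting", 45, 45, 0),
    ("Contact Management Pipeline Graph", 10, 8, 2),
]

for requirement, total, positive, negative in matrix:
    print(f"{requirement}: {coverage_comment(total, positive, negative)}")
```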

“Ensure that our release date is realistic”

To do this, we must have daily running totals that show us test progress (how many test cases have been run, how many have failed, how many have passed, how many are blocked). By trending this out, day by day, we can determine if we are progressing towards the release date.

Software Planner provides graphs that make this easy:

Another way to do it is to create a Burn down chart that spans the testing days. Each day, the chart plots the number of outstanding test cases still to be run (awaiting run, failed or blocked), and we can compare those totals to the daily expected number to determine if the release date is realistic. For example, assume we have a 2 week QA cycle. We define the number of test cases to run, and each day we keep track of how many are still left to complete versus how many should be left to complete.

In the example above, we can see that we are not getting through the test cases quickly enough. On day 5, we are behind schedule by 15 test cases and we can see this clearly on the Burn down graph -- the red area (actual test cases left) is taller than the blue area (expected test cases left).
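The comparison behind the Burn down chart is easy to reproduce. The sketch below assumes an even daily pace and uses hypothetical numbers (100 test cases over 10 testing days), chosen so that day 5 comes out 15 test cases behind, as in the example above; the actual totals behind that example are not given here.

```python
def expected_remaining(total_cases: int, total_days: int, day: int) -> int:
    """Test cases that should still be outstanding after `day`, assuming an even daily pace."""
    return total_cases - (total_cases * day) // total_days


def outstanding(awaiting_run: int, failed: int, blocked: int) -> int:
    """Test cases still to be run: awaiting run, failed or blocked."""
    return awaiting_run + failed + blocked


def cases_behind(total_cases: int, total_days: int, day: int, actual_remaining: int) -> int:
    """Positive means behind schedule, negative means ahead of schedule."""
    return actual_remaining - expected_remaining(total_cases, total_days, day)


# Hypothetical 2 week QA cycle: 100 test cases, 10 testing days, status at the end of day 5
actual = outstanding(awaiting_run=45, failed=12, blocked=8)
print(expected_remaining(100, 10, 5), actual, cases_behind(100, 10, 5, actual))
```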

“Ensure that existing features don’t break with the new release”

To do this, we must run regression tests (manual or automated) that test the old functionality. Note: This assumes we have enough test cases to fully test the old functionality. If we don’t have enough coverage here, we have to create additional test cases. For regression tests, you can normally stick to “positive test cases”, unless you know of specific “negative test cases” that would help prevent common issues once in production.

To ensure you have enough of these test cases, it is a good idea to group the number of regression test cases by functional area. Let's assume we were developing a Contact Management system and we wanted to be sure we had regression test cases assigned to each functional area of that system:

| Functional Area | # Test Cases | Comments |
| --- | --- | --- |
| Contact Management Maintenance | 25 | Good Coverage |
| Contact Management Reporting | 0 | No Coverage |
| Contact Management Import Process | 10 | Good Coverage |
| Contact Management Export Process | 5 | Coverage may be too light |

To determine if release dates are reasonable for your regression testing effort, use the same Burn down approach illustrated above.

Summary

As the new year begins, dedicate yourself to improving your job by identifying your goals and tracking metrics that help you determine how you are trending towards your goals. The metrics listed above work fine for my team but I would like to hear what metrics you find are helpful in your organization. To communicate that to me, please fill out this survey:

http://www.surveymonkey.com/s/DW656JZ

If we get great feedback from this, we will publish the results in our next month's article so that we can all learn from each other.